Chapter 5: Dependency parsing

The zip archive ai.en.zip contains the text of the Wikipedia article, “Artificial Intelligence”. Apply a dependency parser to the text, and store the result in a file. Implement programs that read the dependency trees and perform the jobs.

For your convenience, the zip archive also includes ai.en.txt.json, the text with dependency trees predicted by Stanford CoreNLP and stored in JSON format.

40. Read the parse result (words)Permalink

Design a class Word that represents a word. This class has three member variables, text (word surface), lemma (lemma), and pos (part-of-speech). Represent a sentence as an array of instances of Word class. Implement a program to load the parse result, and store the text as an array of sentences. Show the object of the first sentence of the body of the article.

41. Read the parse result (dependency)Permalink

In addition to problem 40, add three member variables head (a reference to the object of its syntactic governor), dep (dependency type to its governor), and children (a list of references to the syntactic dependents in the parse tree) to the class Word.

Show the pairs of governors (parents) and their dependents (children) of the first sentence of the body of the article. Use the class Word in the rest of the problems in this chapter.

42. Show root wordsPermalink

For each sentence, extract the root word (whose head is ROOT).

43. Show verb governors and noun dependentsPermalink

Show all pairs of verb governors (parents) and their noun dependents (children) from all sentences in the text.

44. Visualize dependency treesPermalink

Visualize a dependency tree of a sentence as a directed graph. Consider converting a dependency tree into DOT language and use Graphviz for drawing a directed graph. In addition, you can use pydot for drawing a dependency tree.

45. Triple with subject, verb, and direct objectPermalink

We are interested in extracting facts from the text. In this chapter, we represent a fact as a tuple of (subject, predicate, object). Extract tuples from dependency trees where:

- subject is a nominal subject of a verb in the past tense

- predicate is the verb in the past tense

- object is a direct object of the verb

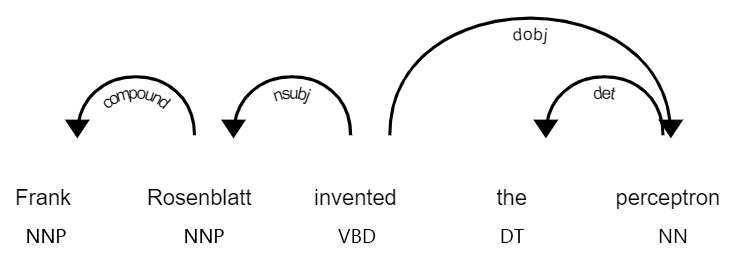

Consider an example sentence, “Frank Rosenblatt invented the perceptron”. We want to extract a tuple, (Rosenblatt, invented, perceptron), from the sentence. In this problem, we only consider a subject and object as a single word.

This graph shows a dependency tree for the sentence (this may vary depending on the parser).

In order to extract a tuple from a dependency tree, it may be a good idea to design an extraction rule on the dependency tree, for example,

{subject}nsubj←{predicate}pos=VBDdobj→{object}46. Expanding subjects and objectsPermalink

Improve the program of Problem 45 to remove the restriction that subjects and objects are single words but can also be phrases. For example, we want to extract (Frank Rosenblatt, invented, perceptron) from the sentence, “Frank Rosenblatt invented the perceptron”.

47. Triple from the passive sentencePermalink

Extract facts from sentences in the passive voice. Consider an example sentence, “Artificial intelligence was founded as an academic discipline in 1955”. We want to extract two tuples from the sentence,

- (Artificial intelligence, founded-as, academic discipline)

- (Artificial intelligence, founded-in, 1955)

48. Extract paths from the root to nounsPermalink

For every noun in a dependency tree, extract a path from the root to the noun. Here, each path must satisfy the following specifications.

- Nodes in a path are words in surface form

- Nodes are connected with “ -> “ from the root to the leaf node

- We don’t have to include dependency types (e.g., nsubj, dobj) when representing a dependency path.

For the example sentence, “Frank Rosenblatt invented the perceptron”, we expect an output,

invented -> Rosenblatt

invented -> Rosenblatt -> Frank

invented -> perceptron

49. Extract the shortest path between two nounsPermalink

Extract the shortest path for every pair of two nouns. Supposing that two nouns appear at the i-th and j-th positions (in words) in a sentence (i<j), the shortest path must satisfy the following specifications.

- Nodes in a path are words in surface form

- Nodes corresponding to the i-th and j-th words are replaced with X and Y, respectively.

- Nodes are connected with either “ -> “ or “ <- “ from X to Y to represent a direction of a dependency.

We can consider two types of dependency paths.

- When the j-th word appears on the path from the i-th word to the root: the path from the i-th word to the j-th word

- When the i-th and j-th words have the common ancestor (the k-th word) in the dependency tree: the path from the i-th word to the k-th word connected with “ <- “, followed by the path from the k-th word to the j-th word connected with “ -> “.

For the example sentence, “Frank Rosenblatt invented the perceptron”, we expect an output,

X <- Y

X <- invented -> Y

X <- Rosenblatt <- invented -> Y